Article Purpose

This article discusses and implements Image Oil Painting Filters and the related Image Cartoon Filters, illustrated with sample images.

Sunflower: Oil Painting, Filter 5, Levels 30, Cartoon Threshold 30

Sample Source Code

This article is accompanied by a sample source code Visual Studio project which is available for download here.

Using the Sample Application

A sample application accompanies this article and provides a visual implementation of the concepts discussed. Source/input images can be selected from the local file system and, if desired, filter result images can be saved to the local file system.

The two main types of functionality exposed by the sample application can be described as Image Oil Painting Filters and Image Cartoon Filters. The user interface provides the following user input options:

- Filter Size – The width and height, in pixels, of the square neighbourhood used in calculating each individual pixel value when applying an Oil Painting Filter. Larger filter sizes result in a more intense Oil Painting Filter being applied. Smaller filter sizes result in a less intense Oil Painting Filter being applied.

- Intensity Levels – Represents the number of Intensity Levels implemented when applying an Oil Painting Filter. Higher values result in a broader range of colour intensities forming part of the result image. Lower values will reduce the range of colour intensities forming part of the result image.

- Cartoon Filter – A Boolean value indicating whether a Cartoon Filter should be applied in addition to the Oil Painting Filter.

- Threshold – Only applicable when applying a Cartoon Filter. This option represents the threshold value used in determining whether a pixel forms part of an Image Edge. Lower values result in more image edges being highlighted. Higher values result in fewer image edges being highlighted.

The following image is a screenshot of the Oil Painting Cartoon Filter sample application in action:

Rose: Oil Painting, Filter 15, Levels 10

Image Oil Painting Filter

The Image Oil Painting Filter consists of two main components: colour gradients and pixel colour intensities. As implied by the title, applying this image filter produces images similar in appearance to oil paintings. Result images express less image detail than the source/input images and tend to have smaller colour ranges.

Four steps are required when implementing an Oil Painting Filter, indicated as follows:

- Iterate each pixel – Every pixel forming part of the source/input image should be iterated. When iterating a pixel determine the neighbouring pixel values based on the specified filter size/filter range.

- Calculate Colour Intensity - Determine the Colour Intensity of each pixel being iterated and that of the neighbouring pixels. The neighbouring pixels included should extend to a range determined by the Filter Size specified. The calculated value should be reduced in order to match a value ranging from zero to the number of Intensity Levels specified.

- Determine the most frequent neighbourhood intensity level – While calculating the colour intensities of a pixel neighbourhood, record the occurrence of each intensity level and sum each of the Red, Green and Blue colour component values of the pixels equating to the same intensity level. Keep track of the intensity level that occurs most often.

- Assign the result pixel – The value assigned to the corresponding pixel in the result image is the average colour of the neighbourhood pixels that expressed the most frequently occurring intensity level. The average is calculated by dividing each colour sum total for that level by the number of occurrences of that level.
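The four steps above can be sketched for a single result pixel as follows. This is a minimal illustration rather than the article's sample code: the `OilPaintSketch` class, the `ResultPixel` method and the representation of a neighbourhood as a list of (R, G, B) tuples are assumptions made to keep the example short.

```csharp
using System;
using System.Collections.Generic;

public static class OilPaintSketch
{
    // Returns the result colour for one pixel, given the colours of its
    // neighbourhood (the pixel itself included) and the number of levels.
    public static (byte R, byte G, byte B) ResultPixel(
        IReadOnlyList<(byte R, byte G, byte B)> neighbourhood, int levels)
    {
        if (neighbourhood.Count == 0)
            throw new ArgumentException("Neighbourhood must not be empty.");
        if (levels < 1)
            throw new ArgumentOutOfRangeException(nameof(levels));

        int[] count = new int[levels];
        int[] redSum = new int[levels];
        int[] greenSum = new int[levels];
        int[] blueSum = new int[levels];
        int bestLevel = 0;

        foreach (var (r, g, b) in neighbourhood)
        {
            // Average the channels and scale the result to 0..levels-1.
            int level = (int)Math.Round((r + g + b) / 3.0 * (levels - 1) / 255.0);

            // Record the occurrence and accumulate the colour sums.
            count[level]++;
            redSum[level] += r;
            greenSum[level] += g;
            blueSum[level] += b;

            // Track the level that has occurred most often so far.
            if (count[level] > count[bestLevel])
                bestLevel = level;
        }

        // Average the colours of the pixels sharing the most frequent level.
        int n = count[bestLevel];
        return ((byte)(redSum[bestLevel] / n),
                (byte)(greenSum[bestLevel] / n),
                (byte)(blueSum[bestLevel] / n));
    }
}
```

For a neighbourhood of two dark pixels and one bright pixel, the bright pixel falls into a different intensity level and is ignored, so the result is the average of the two dark pixels only.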

Roses: Oil Painting, Filter 11, Levels 60, Cartoon Threshold 80

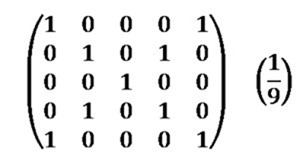

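When calculating colour intensity reduced to fit the number of levels specified, the algorithm implemented can be expressed as follows:

I = ((R + G + B) / 3 × l) / 255

In the sample source code the result is rounded to the nearest integer, and l is reduced by one beforehand so that I ranges from zero to one less than the number of levels specified.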

In the algorithm listed above the variables implemented can be explained as follows:

- I – Intensity: The calculated intensity value.

- R – Red: The value of a pixel’s Red colour component.

- G – Green: The value of a pixel’s Green colour component.

- B – Blue: The value of a pixel’s Blue colour component.

- l – Number of intensity levels: The maximum number of intensity levels specified.
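As a worked example of the intensity calculation, the following sketch maps a colour to an intensity level. The `IntensityLevels` class and `Quantize` method are hypothetical names used only for illustration. As in the sample source code, the multiplier is the number of levels minus one and the result is rounded.

```csharp
using System;

public static class IntensityLevels
{
    // Maps an RGB colour to an intensity level in the range 0..levels-1.
    public static int Quantize(byte r, byte g, byte b, int levels)
    {
        double average = (r + g + b) / 3.0;
        return (int)Math.Round(average * (levels - 1) / 255.0);
    }
}
```

With 30 levels, the colour (200, 100, 60) has an average of 120 and maps to level 14, since 120 × 29 / 255 is roughly 13.65. Black maps to level 0 and white maps to level 29.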

Rose: Oil Painting, Filter 15, Levels 30

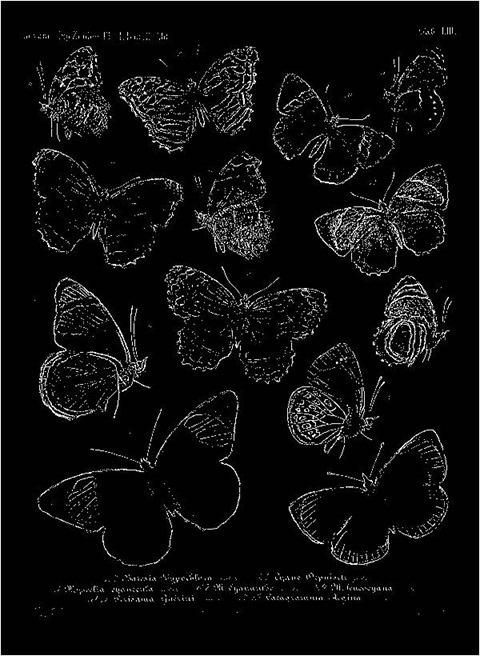

Cartoon Filter implementing Edge Detection

A Cartoon Filter effect can be achieved by combining an Image Oil Painting filter and an Edge Detection Filter. The Oil Painting filter has the effect of creating more gradual image colour gradients, in other words reducing image edge intensity.

The steps required in implementing a Cartoon filter can be listed as follows:

- Apply Oil Painting filter – Applying an Oil Painting Filter creates the perception of result images having been painted by hand.

- Implement Edge Detection – Using the original source/input image create a new binary image detailing image edges.

- Overlay edges on Oil Painting image – Iterate each pixel forming part of the edge detected image. If the pixel being iterated forms part of an edge, the related pixel in the Oil Painting filtered image should be set to black. Because the edge detected image was created as a binary image, a pixel forms part of an edge if that pixel is white.
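The overlay step can be sketched on raw pixel buffers as shown below. This is an illustrative sketch, not the sample project's code: the `EdgeOverlaySketch` class and `Overlay` method are hypothetical, and both buffers are assumed to hold four bytes per pixel in blue, green, red, alpha order.

```csharp
using System;

public static class EdgeOverlaySketch
{
    // Returns a copy of paintPixels in which every pixel marked white in
    // edgePixels is replaced by opaque black.
    public static byte[] Overlay(byte[] paintPixels, byte[] edgePixels)
    {
        if (paintPixels.Length != edgePixels.Length || paintPixels.Length % 4 != 0)
            throw new ArgumentException("Buffers must be equal-length 4-byte pixel data.");

        byte[] result = new byte[paintPixels.Length];

        for (int k = 0; k < result.Length; k += 4)
        {
            // In a binary edge image all colour channels are equal,
            // so testing one channel for white is sufficient.
            bool isEdge = edgePixels[k] == 255;

            result[k]     = isEdge ? (byte)0 : paintPixels[k];
            result[k + 1] = isEdge ? (byte)0 : paintPixels[k + 1];
            result[k + 2] = isEdge ? (byte)0 : paintPixels[k + 2];
            result[k + 3] = 255; // fully opaque
        }

        return result;
    }
}
```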

Daisy: Oil Painting, Filter 7, Levels 30, Cartoon Threshold 40

In the sample source code edge detection has been implemented through Gradient Based Edge Detection. This method of edge detection compares the colour values of the pixels neighbouring a pixel on opposite sides. A pixel forms part of an edge if the difference in neighbouring pixel colour values exceeds a specified threshold value. The steps involved in Gradient Based Edge Detection are as follows:

- Iterate each pixel – Each pixel forming part of a source/input image should be iterated.

- Determine Horizontal and Vertical Gradients – Calculate the colour value difference between the currently iterated pixel’s left and right neighbour pixels as well as its top and bottom neighbour pixels. The sample code halves each difference and adds the two together. If the combined gradient exceeds the specified threshold, skip to the final step.

- Determine Horizontal Gradient – Calculate the colour value difference between the currently iterated pixel’s left and right neighbour pixels. If the gradient exceeds the specified threshold, skip to the final step.

- Determine Vertical Gradient – Calculate the colour value difference between the currently iterated pixel’s top and bottom neighbour pixels. If the gradient exceeds the specified threshold, skip to the final step.

- Determine Diagonal Gradients – Calculate the colour value difference between the currently iterated pixel’s North-Western and South-Eastern neighbour pixels as well as its North-Eastern and South-Western neighbour pixels, combined in the same way as the first step. If the combined gradient exceeds the specified threshold, skip to the final step.

- Determine NW-SE Gradient – Calculate the colour value difference between the currently iterated pixel’s North-Western and South-Eastern neighbour pixels. If the gradient exceeds the specified threshold, skip to the final step.

- Determine NE-SW Gradient – Calculate the colour value difference between the currently iterated pixel’s North-Eastern and South-Western neighbour pixels.

- Determine and set result pixel value – If any of the six gradients calculated exceeded the specified threshold value, set the related pixel in the result image to white. If not, set the related pixel to black.
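The sequence of checks listed above can be sketched for a single-channel image as follows. This is a simplified illustration under stated assumptions: the `GradientEdgeSketch` class and `IsEdge` method are hypothetical, the image is a two-dimensional array of grey values indexed as `[y, x]`, and a gradient equal to the threshold counts as an edge, which matches the `>=` comparison used in the sample source code.

```csharp
using System;

public static class GradientEdgeSketch
{
    // Returns true when the pixel at (x, y) lies on an edge. The pixel must
    // not be on the image border, since all eight neighbours are read.
    public static bool IsEdge(int[,] image, int x, int y, int threshold)
    {
        int horizontal = Math.Abs(image[y, x - 1] - image[y, x + 1]);
        int vertical   = Math.Abs(image[y - 1, x] - image[y + 1, x]);
        int nwSe       = Math.Abs(image[y - 1, x - 1] - image[y + 1, x + 1]);
        int neSw       = Math.Abs(image[y - 1, x + 1] - image[y + 1, x - 1]);

        // The || operator short-circuits, so later checks only run when
        // every earlier check stayed below the threshold.
        return horizontal / 2 + vertical / 2 >= threshold
            || horizontal >= threshold
            || vertical >= threshold
            || nwSe / 2 + neSw / 2 >= threshold
            || nwSe >= threshold
            || neSw >= threshold;
    }
}
```

For an image that ramps from 0 on the left to 100 on the right, the centre pixel has a horizontal gradient of 100 and is reported as an edge for a threshold of 40, whereas a uniformly coloured image yields no edge for the same threshold.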

Rose: Oil Painting, Filter 9, Levels 30

Implementing an Oil Painting Filter

The sample source code defines the OilPaintFilter method, an extension method targeting the Bitmap class. For each pixel this method determines the most frequently occurring colour intensity level among the neighbouring pixels and assigns the average colour of the pixels at that level. The definition is detailed as follows:

    // Requires: using System; using System.Drawing; using System.Drawing.Imaging;
    // using System.Runtime.InteropServices; and an enclosing static class.
    public static Bitmap OilPaintFilter(this Bitmap sourceBitmap,
                                        int levels, int filterSize)
    {
        BitmapData sourceData = sourceBitmap.LockBits(
            new Rectangle(0, 0, sourceBitmap.Width, sourceBitmap.Height),
            ImageLockMode.ReadOnly, PixelFormat.Format32bppArgb);

        byte[] pixelBuffer = new byte[sourceData.Stride * sourceData.Height];
        byte[] resultBuffer = new byte[sourceData.Stride * sourceData.Height];

        Marshal.Copy(sourceData.Scan0, pixelBuffer, 0, pixelBuffer.Length);
        sourceBitmap.UnlockBits(sourceData);

        // One bin per intensity level: occurrence count and colour sums.
        int[] intensityBin = new int[levels];
        int[] blueBin = new int[levels];
        int[] greenBin = new int[levels];
        int[] redBin = new int[levels];

        // From here on levels holds the highest valid bin index.
        levels = levels - 1;

        int filterOffset = (filterSize - 1) / 2;
        int byteOffset = 0;
        int calcOffset = 0;
        int currentIntensity = 0;
        int maxIntensity = 0; // highest occurrence count found so far
        int maxIndex = 0;     // intensity level with that count

        double blue = 0;
        double green = 0;
        double red = 0;

        for (int offsetY = filterOffset; offsetY < sourceBitmap.Height - filterOffset; offsetY++)
        {
            for (int offsetX = filterOffset; offsetX < sourceBitmap.Width - filterOffset; offsetX++)
            {
                blue = green = red = 0;
                currentIntensity = maxIntensity = maxIndex = 0;

                // Reset the bins for the current neighbourhood.
                Array.Clear(intensityBin, 0, intensityBin.Length);
                Array.Clear(blueBin, 0, blueBin.Length);
                Array.Clear(greenBin, 0, greenBin.Length);
                Array.Clear(redBin, 0, redBin.Length);

                byteOffset = offsetY * sourceData.Stride + offsetX * 4;

                for (int filterY = -filterOffset; filterY <= filterOffset; filterY++)
                {
                    for (int filterX = -filterOffset; filterX <= filterOffset; filterX++)
                    {
                        calcOffset = byteOffset + (filterX * 4) + (filterY * sourceData.Stride);

                        // Average of the blue, green and red bytes, scaled to 0..levels.
                        currentIntensity = (int)Math.Round(((double)
                            (pixelBuffer[calcOffset] +
                             pixelBuffer[calcOffset + 1] +
                             pixelBuffer[calcOffset + 2]) / 3.0 * (levels)) / 255.0);

                        intensityBin[currentIntensity] += 1;
                        blueBin[currentIntensity] += pixelBuffer[calcOffset];
                        greenBin[currentIntensity] += pixelBuffer[calcOffset + 1];
                        redBin[currentIntensity] += pixelBuffer[calcOffset + 2];

                        if (intensityBin[currentIntensity] > maxIntensity)
                        {
                            maxIntensity = intensityBin[currentIntensity];
                            maxIndex = currentIntensity;
                        }
                    }
                }

                // Average colour of the pixels at the most frequent level.
                blue = blueBin[maxIndex] / maxIntensity;
                green = greenBin[maxIndex] / maxIntensity;
                red = redBin[maxIndex] / maxIntensity;

                // ClipByte is not listed in this article; it is assumed to be a
                // helper in the sample project that clamps a value to 0..255.
                resultBuffer[byteOffset] = ClipByte(blue);
                resultBuffer[byteOffset + 1] = ClipByte(green);
                resultBuffer[byteOffset + 2] = ClipByte(red);
                resultBuffer[byteOffset + 3] = 255;
            }
        }

        Bitmap resultBitmap = new Bitmap(sourceBitmap.Width, sourceBitmap.Height);

        BitmapData resultData = resultBitmap.LockBits(
            new Rectangle(0, 0, resultBitmap.Width, resultBitmap.Height),
            ImageLockMode.WriteOnly, PixelFormat.Format32bppArgb);

        Marshal.Copy(resultBuffer, 0, resultData.Scan0, resultBuffer.Length);
        resultBitmap.UnlockBits(resultData);

        return resultBitmap;
    }

Rose: Oil Painting, Filter 7, Levels 20, Cartoon Threshold 20

Implementing a Cartoon Filter using Edge Detection

The sample source code defines the CheckThreshold method. The purpose of this method is to determine the difference in colour between two pixels and add it to a running gradient value. The method then compares the accumulated gradient value with the specified threshold value and returns true when the gradient is greater than or equal to the threshold. The following code snippet provides the implementation:

    private static bool CheckThreshold(byte[] pixelBuffer,
                                       int offset1, int offset2,
                                       ref int gradientValue,
                                       byte threshold,
                                       int divideBy = 1)
    {
        gradientValue += Math.Abs(pixelBuffer[offset1] - pixelBuffer[offset2]) / divideBy;

        gradientValue += Math.Abs(pixelBuffer[offset1 + 1] - pixelBuffer[offset2 + 1]) / divideBy;

        gradientValue += Math.Abs(pixelBuffer[offset1 + 2] - pixelBuffer[offset2 + 2]) / divideBy;

        return (gradientValue >= threshold);
    }
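Because the gradient value is passed by reference, two consecutive calls accumulate into the same total. The following sketch shows how two calls with a divideBy value of 2 combine a pair of gradients. The `CheckThresholdDemo` class and `CombinedGradient` method are hypothetical; the CheckThreshold method is repeated from above only so that the example is self-contained.

```csharp
using System;

public static class CheckThresholdDemo
{
    // Same logic as the CheckThreshold method listed above.
    private static bool CheckThreshold(byte[] pixelBuffer, int offset1, int offset2,
                                       ref int gradientValue, byte threshold,
                                       int divideBy = 1)
    {
        gradientValue += Math.Abs(pixelBuffer[offset1] - pixelBuffer[offset2]) / divideBy;
        gradientValue += Math.Abs(pixelBuffer[offset1 + 1] - pixelBuffer[offset2 + 1]) / divideBy;
        gradientValue += Math.Abs(pixelBuffer[offset1 + 2] - pixelBuffer[offset2 + 2]) / divideBy;

        return gradientValue >= threshold;
    }

    // Accumulates half of the difference between pixels a1/a2 and half of the
    // difference between pixels b1/b2, then reports the total and the result.
    public static (bool Exceeds, int Gradient) CombinedGradient(
        byte[] pixels, int a1, int a2, int b1, int b2, byte threshold)
    {
        int gradient = 0;

        // The return value of the first call is ignored, as in the sample code.
        CheckThreshold(pixels, a1, a2, ref gradient, threshold, 2);
        bool exceeds = CheckThreshold(pixels, b1, b2, ref gradient, threshold, 2);

        return (exceeds, gradient);
    }
}
```

For two pixel pairs whose channels differ by 100 and 20 respectively, the first call contributes 3 × 50 = 150 and the second 3 × 10 = 30, giving a combined gradient of 180.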

Rose: Oil Painting, Filter 13, Levels 15

The GradientBasedEdgeDetectionFilter method has been defined as an extension method targeting the Bitmap class. This method iterates each pixel forming part of the source/input image. Whilst iterating pixels the GradientBasedEdgeDetectionFilter extension method determines whether the colour gradients in various directions exceed the specified threshold value. A pixel is considered part of an edge if a colour gradient exceeds the threshold value. The implementation is as follows:

    public static Bitmap GradientBasedEdgeDetectionFilter(
        this Bitmap sourceBitmap, byte threshold = 0)
    {
        BitmapData sourceData = sourceBitmap.LockBits(
            new Rectangle(0, 0, sourceBitmap.Width, sourceBitmap.Height),
            ImageLockMode.ReadOnly, PixelFormat.Format32bppArgb);

        byte[] pixelBuffer = new byte[sourceData.Stride * sourceData.Height];
        byte[] resultBuffer = new byte[sourceData.Stride * sourceData.Height];

        Marshal.Copy(sourceData.Scan0, pixelBuffer, 0, pixelBuffer.Length);
        sourceBitmap.UnlockBits(sourceData);

        int sourceOffset = 0, gradientValue = 0;
        bool exceedsThreshold = false;

        for (int offsetY = 1; offsetY < sourceBitmap.Height - 1; offsetY++)
        {
            for (int offsetX = 1; offsetX < sourceBitmap.Width - 1; offsetX++)
            {
                sourceOffset = offsetY * sourceData.Stride + offsetX * 4;
                gradientValue = 0;
                exceedsThreshold = true;

                // Horizontal Gradient
                CheckThreshold(pixelBuffer,
                               sourceOffset - 4,
                               sourceOffset + 4,
                               ref gradientValue, threshold, 2);

                // Vertical Gradient
                exceedsThreshold =
                    CheckThreshold(pixelBuffer,
                                   sourceOffset - sourceData.Stride,
                                   sourceOffset + sourceData.Stride,
                                   ref gradientValue, threshold, 2);

                if (exceedsThreshold == false)
                {
                    gradientValue = 0;

                    // Horizontal Gradient
                    exceedsThreshold =
                        CheckThreshold(pixelBuffer,
                                       sourceOffset - 4,
                                       sourceOffset + 4,
                                       ref gradientValue, threshold);

                    if (exceedsThreshold == false)
                    {
                        gradientValue = 0;

                        // Vertical Gradient
                        exceedsThreshold =
                            CheckThreshold(pixelBuffer,
                                           sourceOffset - sourceData.Stride,
                                           sourceOffset + sourceData.Stride,
                                           ref gradientValue, threshold);

                        if (exceedsThreshold == false)
                        {
                            gradientValue = 0;

                            // Diagonal Gradient : NW-SE
                            CheckThreshold(pixelBuffer,
                                           sourceOffset - 4 - sourceData.Stride,
                                           sourceOffset + 4 + sourceData.Stride,
                                           ref gradientValue, threshold, 2);

                            // Diagonal Gradient : NE-SW
                            exceedsThreshold =
                                CheckThreshold(pixelBuffer,
                                               sourceOffset - sourceData.Stride + 4,
                                               sourceOffset - 4 + sourceData.Stride,
                                               ref gradientValue, threshold, 2);

                            if (exceedsThreshold == false)
                            {
                                gradientValue = 0;

                                // Diagonal Gradient : NW-SE
                                exceedsThreshold =
                                    CheckThreshold(pixelBuffer,
                                                   sourceOffset - 4 - sourceData.Stride,
                                                   sourceOffset + 4 + sourceData.Stride,
                                                   ref gradientValue, threshold);

                                if (exceedsThreshold == false)
                                {
                                    gradientValue = 0;

                                    // Diagonal Gradient : NE-SW
                                    exceedsThreshold =
                                        CheckThreshold(pixelBuffer,
                                                       sourceOffset - sourceData.Stride + 4,
                                                       sourceOffset + sourceData.Stride - 4,
                                                       ref gradientValue, threshold);
                                }
                            }
                        }
                    }
                }

                resultBuffer[sourceOffset] = (byte)(exceedsThreshold ? 255 : 0);
                resultBuffer[sourceOffset + 1] = resultBuffer[sourceOffset];
                resultBuffer[sourceOffset + 2] = resultBuffer[sourceOffset];
                resultBuffer[sourceOffset + 3] = 255;
            }
        }

        Bitmap resultBitmap = new Bitmap(sourceBitmap.Width, sourceBitmap.Height);

        BitmapData resultData = resultBitmap.LockBits(
            new Rectangle(0, 0, resultBitmap.Width, resultBitmap.Height),
            ImageLockMode.WriteOnly, PixelFormat.Format32bppArgb);

        Marshal.Copy(resultBuffer, 0, resultData.Scan0, resultBuffer.Length);
        resultBitmap.UnlockBits(resultData);

        return resultBitmap;
    }

Rose: Oil Painting, Filter 7, Levels 20, Cartoon Threshold 20

The CartoonFilter extension method serves to combine images generated by the OilPaintFilter and GradientBasedEdgeDetectionFilter methods. The CartoonFilter method is defined as an extension method targeting the Bitmap class. In this method pixels detected as forming part of an edge are set to black in Oil Painting filtered images. The definition is as follows:

    public static Bitmap CartoonFilter(this Bitmap sourceBitmap,
                                       int levels, int filterSize,
                                       byte threshold)
    {
        Bitmap paintFilterImage = sourceBitmap.OilPaintFilter(levels, filterSize);

        Bitmap edgeDetectImage = sourceBitmap.GradientBasedEdgeDetectionFilter(threshold);

        BitmapData paintData = paintFilterImage.LockBits(
            new Rectangle(0, 0, paintFilterImage.Width, paintFilterImage.Height),
            ImageLockMode.ReadOnly, PixelFormat.Format32bppArgb);

        byte[] paintPixelBuffer = new byte[paintData.Stride * paintData.Height];

        Marshal.Copy(paintData.Scan0, paintPixelBuffer, 0, paintPixelBuffer.Length);
        paintFilterImage.UnlockBits(paintData);

        BitmapData edgeData = edgeDetectImage.LockBits(
            new Rectangle(0, 0, edgeDetectImage.Width, edgeDetectImage.Height),
            ImageLockMode.ReadOnly, PixelFormat.Format32bppArgb);

        byte[] edgePixelBuffer = new byte[edgeData.Stride * edgeData.Height];

        Marshal.Copy(edgeData.Scan0, edgePixelBuffer, 0, edgePixelBuffer.Length);
        edgeDetectImage.UnlockBits(edgeData);

        byte[] resultBuffer = new byte[edgeData.Stride * edgeData.Height];

        // k + 3 is the last byte of the current pixel, so this bound
        // includes the final pixel in the buffer.
        for (int k = 0; k + 3 < paintPixelBuffer.Length; k += 4)
        {
            if (edgePixelBuffer[k] == 255 ||
                edgePixelBuffer[k + 1] == 255 ||
                edgePixelBuffer[k + 2] == 255)
            {
                // Edge pixel: draw it in opaque black.
                resultBuffer[k] = 0;
                resultBuffer[k + 1] = 0;
                resultBuffer[k + 2] = 0;
                resultBuffer[k + 3] = 255;
            }
            else
            {
                // Non-edge pixel: keep the Oil Painting colour.
                resultBuffer[k] = paintPixelBuffer[k];
                resultBuffer[k + 1] = paintPixelBuffer[k + 1];
                resultBuffer[k + 2] = paintPixelBuffer[k + 2];
                resultBuffer[k + 3] = 255;
            }
        }

        Bitmap resultBitmap = new Bitmap(sourceBitmap.Width, sourceBitmap.Height);

        BitmapData resultData = resultBitmap.LockBits(
            new Rectangle(0, 0, resultBitmap.Width, resultBitmap.Height),
            ImageLockMode.WriteOnly, PixelFormat.Format32bppArgb);

        Marshal.Copy(resultBuffer, 0, resultData.Scan0, resultBuffer.Length);
        resultBitmap.UnlockBits(resultData);

        return resultBitmap;
    }

Rose: Oil Painting, Filter 9, Levels 25, Cartoon Threshold 25

Sample Images

This article features a number of sample images. All featured images have been licensed allowing for reproduction. The following image files are featured as sample images:

- Iceberg Rose – Licensed under the Creative Commons Attribution-Share Alike 3.0 Unported license. Attribution: Stan Shebs. Download from Wikipedia

- Heidi Klum Rose – Licensed under the Creative Commons Attribution-Share Alike 3.0 Unported, 2.5 Generic, 2.0 Generic and 1.0 Generic license. Download from Wikipedia

- White Daisy – Licensed under the Creative Commons Attribution-Share Alike 3.0 Unported license. Download from Wikipedia.

- Amber Flush Rose – Licensed under the Creative Commons Attribution-Share Alike 3.0 Unported, 2.5 Generic, 2.0 Generic and 1.0 Generic license. Download from Wikipedia.

- Rose Bouquet – Licensed under the Creative Commons Attribution-Share Alike 3.0 Unported, 2.5 Generic, 2.0 Generic and 1.0 Generic license. Download from Wikipedia.

- Sunflower – Is in the public domain in the United States because it is a work prepared by an officer or employee of the United States Government as part of that person’s official duties under the terms of Title 17, Chapter 1, Section 105 of the US Code. See Copyright. Download from Wikimedia.

- White Rose – Has been released into the public domain by its author, Laitche. This applies worldwide. Download from Wikimedia.

Related Articles and Feedback

Feedback and questions are always encouraged. If you know of an alternative implementation or have ideas on a more efficient implementation please share in the comments section.

I’ve published a number of articles related to imaging and images, listed below:

- C# How to: Image filtering by directly manipulating Pixel ARGB values

- C# How to: Image filtering implemented using a ColorMatrix

- C# How to: Blending Bitmap images using colour filters

- C# How to: Bitmap Colour Substitution implementing thresholds

- C# How to: Generating Icons from Images

- C# How to: Swapping Bitmap ARGB Colour Channels

- C# How to: Bitmap Pixel manipulation using LINQ Queries

- C# How to: Linq to Bitmaps – Partial Colour Inversion

- C# How to: Bitmap Colour Balance

- C# How to: Bi-tonal Bitmaps

- C# How to: Bitmap Colour Tint

- C# How to: Bitmap Colour Shading

- C# How to: Image Solarise

- C# How to: Image Contrast

- C# How to: Bitwise Bitmap Blending

- C# How to: Image Arithmetic

- C# How to: Image Convolution

- C# How to: Image Edge Detection

- C# How to: Difference Of Gaussians

- C# How to: Image Median Filter

- C# How to: Image Unsharp Mask

- C# How to: Image Colour Average

- C# How to: Image Erosion and Dilation

- C# How to: Morphological Edge Detection

- C# How to: Boolean Edge Detection

- C# How to: Gradient Based Edge Detection

- C# How to: Sharpen Edge Detection

- C# How to: Calculating Gaussian Kernels

- C# How to: Image Blur

- C# How to: Image Transform Rotate

- C# How to: Image Transform Shear

- C# How to: Compass Edge Detection

- C# How to: Oil Painting and Cartoon Filter

- C# How to: Stained Glass Image Filter